HBase vs. Cassandra: NoSQL Battle!

Distributed, scalable databases are desperately needed these days. From building massive data warehouses at a social media startup to protein folding analysis at a biotech company, "Big Data" is becoming more important every day. While Hadoop has emerged as the de facto standard for handling big data problems, there are still quite a few distributed databases out there, and each has its own strengths.

Two databases have garnered the most attention: HBase and Cassandra. The split between these equally ambitious projects can be categorized into Features (things missing that could be added at any time), and Architecture (fundamental differences that can't be coded away). HBase is a near-clone of Google's BigTable, whereas Cassandra purports to be a "BigTable/Dynamo hybrid".

In my opinion, while Cassandra's "writes-never-fail" emphasis has its advantages, HBase is the more robust database for a majority of use-cases. Cassandra relies mostly on Key-Value pairs for storage, with a table-like structure added to make more robust data structures possible. And it's a fact that far more people are using HBase than Cassandra at this moment, despite both being similarly recent.

Let's explore the differences between the two in more detail…

CAP and You

This article at Streamy explains the CAP theorem (Consistency, Availability, Partition tolerance) and how the BigTable-derived HBase and the Dynamo-derived Cassandra differ.

Before we go any further, let's break it down as simply as possible:

- Consistency: "Is the data I'm looking at now the same if I look at it somewhere else?"

- Availability: "What happens if my database goes down?"

- Partitioning: "What if my data is on different networks?"

CAP posits that a distributed system cannot fully deliver all three at once and has to compromise somewhere. HBase values strong consistency and high availability, while Cassandra values availability and partition tolerance. Replication is one way of dealing with some of the design tradeoffs. HBase does not have replication yet, but that's about to change, and Cassandra's replication comes with some caveats and penalties.

Let's go over some comparisons between these two datastores:

Feature Comparisons

Processing

HBase is part of the Hadoop ecosystem, so many useful distributed processing frameworks support it: Pig, Cascading, Hive, etc. This makes it easy to do complex data analytics without resorting to hand-coding. Efficiently running MapReduce on Cassandra, on the other hand, is difficult because all of its keys are in one big "space", so the MapReduce framework doesn't know how to split and divide the data natively. There needs to be some hackery in place to handle all of that.
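To make the splitting problem concrete, here is a toy Python sketch. It is not the HBase or Cassandra API. It assumes that regions are contiguous, sorted key ranges, and that the "one big space" places keys by hash. The names `region_for`, `node_for`, and the split points are hypothetical.

```python
import bisect
import hashlib

def region_for(key, split_points):
    """Range partitioning: regions are contiguous, sorted key ranges."""
    return bisect.bisect_right(split_points, key)

def node_for(key, num_nodes):
    """Hash partitioning (assumed): placement ignores key order."""
    digest = hashlib.md5(key.encode("utf-8")).hexdigest()
    return int(digest, 16) % num_nodes

keys = sorted(f"user{i:03d}" for i in range(12))
split_points = ["user004", "user008"]  # hypothetical region boundaries

# Each region holds one contiguous slice of the sorted keys,
# so it maps naturally onto one MapReduce input split.
splits = {}
for k in keys:
    splits.setdefault(region_for(k, split_points), []).append(k)

# With hash placement, neighbouring keys are scattered across nodes,
# so there is no obvious key range to hand to each mapper.
placement = {k: node_for(k, 3) for k in keys}
```

In this model a job can be divided simply by handing each mapper a start key and an end key. With hash placement the framework needs extra help to find out which keys live where.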

In fact, here's some code from a Cassandra/Hadoop Integration patch:

+ /*

+ FIXME This is basically a huge kludge because we needed access to

+ cassandra internals, and needed access to hadoop internals and so we

+ have to boot cassandra when we run hadoop. This is all pretty

+ fucking awful.

+

+ P.S. it does not boot the thrift interface.

+ */

This gives me The Fear.

Bottom line? Cassandra may be useful for storage, but not for data processing. HBase is much handier for that.

Installation & Ease of Use

Cassandra is only a Ruby gem install away. That's pretty impressive. You still have to do quite a bit of manual configuration, however. HBase is a .tar (or packaged by Cloudera) that you need to install and set up on your own. HBase has thorough documentation, though, making the process a little more straightforward than it could've been.

HBase ships with a very nice Ruby shell that makes it easy to create and modify databases, set and retrieve data, and so on. We use it constantly to test our code. Cassandra does not have a shell at all — just a basic API. HBase also has a nice web-based UI that you can use to view cluster status, determine which nodes store various data, and do some other basic operations. Cassandra lacks this web UI as well as a shell, making it harder to operate. (ed: Apparently, there is now a shell and pretty basic UI — I just couldn't find 'em).

Overall Cassandra wins on installation, but lags on usability.

Architecture

The fundamental divergence of ideas and architecture behind Cassandra and HBase drives much of the controversy over which is better.

Off the bat, Cassandra claims that "writes never fail", whereas in HBase, if a region server is down, writes will be blocked for affected data until the data is redistributed. This rarely happens in practice, of course, but will happen in a large enough cluster. In addition, HBase has a single point-of-failure (the Hadoop NameNode), but that will be less of an issue as Hadoop evolves. HBase does have row locking, however, which Cassandra does not.

Apps usually rely on data being accurate and unchanged from the time of access, so the idea of eventual consistency can be a problem. Cassandra, however, has an internal method of resolving up-to-dateness issues with vector clocks, a complex but workable solution where basically the latest timestamp wins. The HBase/BigTable model puts the onus of resolving any consistency conflicts on the application, as everything is stored versioned by timestamp.
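The two approaches can be sketched in a few lines of Python. This is a toy model under stated assumptions, not either database's real implementation. `last_write_wins` stands in for the cluster choosing a value, and the hypothetical `VersionedCell` class stands in for a store that keeps several timestamped versions and lets the application decide.

```python
def last_write_wins(replica_values):
    """Cluster-side resolution: keep the pair with the highest timestamp."""
    return max(replica_values, key=lambda tv: tv[0])

class VersionedCell:
    """Application-side resolution: keep recent versions, newest first."""

    def __init__(self, max_versions=3):
        self.max_versions = max_versions
        self.versions = []  # list of (timestamp, value)

    def put(self, timestamp, value):
        self.versions.append((timestamp, value))
        self.versions.sort(key=lambda tv: tv[0], reverse=True)
        del self.versions[self.max_versions:]  # drop the oldest extras

    def get(self, n=1):
        """Return up to n versions so the caller can pick the right one."""
        return self.versions[:n]

# Three replicas disagree about a value.
conflicting = [(10, "a"), (30, "c"), (20, "b")]
winner = last_write_wins(conflicting)

cell = VersionedCell(max_versions=2)
for ts, value in conflicting:
    cell.put(ts, value)
history = cell.get(2)
```

The first function discards everything except the winner. The versioned cell keeps the runner-up as well, which is what lets application code apply its own rule.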

Another architectural quibble is that Cassandra only supports one table per install. That means you can't denormalize and duplicate your data to make it more usable in analytical scenarios. (edit: this was corrected in the latest release) Cassandra is really more of a Key Value store than a Data Warehouse. Furthermore, schema changes require a cluster restart(!). Here's what the Cassandra JIRA says to do for a schema change:

- Kill Cassandra

- Start it again and wait for log replay to finish

- Kill Cassandra AGAIN

- Make your edits (now there is no data in the commitlog)

- Manually remove the sstable files (-Data.db, -Index.db, and -Filter.db) for the CFs you removed, and rename files for CFs you renamed

- Start Cassandra and your edits should take effect

With no timestamp versioning, only eventual consistency, no regions (making things like MapReduce difficult), and only one table per install, it's difficult to claim that Cassandra implements the BigTable model.

Replication

Cassandra is optimized for small datacenters (hundreds of nodes) connected by very fast fiber. It's part of Dynamo's legacy from Amazon. HBase, being based on research originally published by Google, is happy to handle replication to thousands of planet-strewn nodes across the 'slow', unpredictable Internet.

A major difference between the two projects is their approach to replication and multiple datacenters. Cassandra uses a P2P sharing model, whereas HBase (the upcoming version) employs more of a data+logs backup method, aka 'log shipping'. Each has a certain elegance. Rather than explain this in words, here come the drawings:

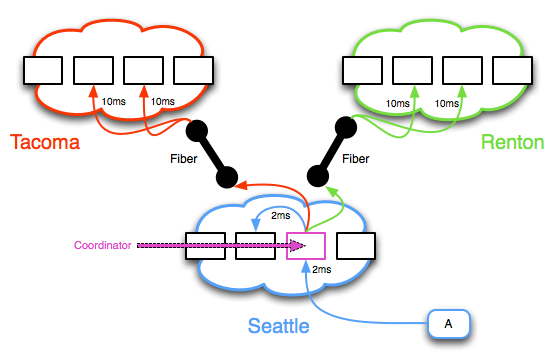

This first diagram is a model of the Cassandra replication scheme.

- The value is written to the "Coordinator" node

- A duplicate value is written to another node in the same cluster

- A third and fourth value are written from the Coordinator to another cluster across the high-speed fiber

- A fifth and sixth value are written from the Coordinator to a third cluster across the fiber

- Any conflicts are resolved in the cluster by examining timestamps and determining the "best" value.
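The steps above can be modeled with a small Python sketch. It is a simplification under loud assumptions: the `Node` class, `coordinator_write`, and `read_latest` are hypothetical names, the coordinator simply fans the write out to every replica, and no repair mechanism is modeled.

```python
class Node:
    def __init__(self, name, dc, up=True):
        self.name, self.dc, self.up = name, dc, up
        self.data = {}  # key -> (timestamp, value)

    def write(self, key, timestamp, value):
        if not self.up:
            return False  # unreachable replica: no ack
        current = self.data.get(key)
        if current is None or timestamp > current[0]:
            self.data[key] = (timestamp, value)
        return True

def coordinator_write(replicas, key, timestamp, value):
    """Send the write to every replica; return names of those that acked."""
    return [n.name for n in replicas if n.write(key, timestamp, value)]

def read_latest(replicas, key):
    """Ask reachable replicas and keep the newest timestamped value."""
    found = [n.data[key] for n in replicas if n.up and key in n.data]
    return max(found, key=lambda tv: tv[0]) if found else None

# Three datacenters with two replicas each, as in the diagram.
dcs = ["dc1", "dc2", "dc3"]
replicas = [Node(f"{dc}-n{i}", dc) for dc in dcs for i in (1, 2)]

acked = coordinator_write(replicas, "k", 1, "old")  # all six ack
for n in replicas:
    if n.dc == "dc3":
        n.up = False  # dc3 drops off the network
acked_during_outage = coordinator_write(replicas, "k", 2, "new")
for n in replicas:
    n.up = True  # dc3 returns, still holding the old value
stale = [n.name for n in replicas if n.data["k"][1] == "old"]
latest = read_latest(replicas, "k")
```

A read that consults all replicas still finds the newest value by timestamp. Nothing in this model says when the two stale nodes will catch up.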

The major problem with this scheme is that there is no real-world auditability. The nodes are eventually consistent — if a datacenter ("DC") fails, it's impossible to tell when the required number of replicas will be up-to-date. This can be extremely painful in a live situation — when one of your DCs goes down, you often want to know *exactly* when to expect data consistency so that recovery operations can go ahead smoothly.

It's important to note that Cassandra relies on high-speed fiber between datacenters. If your writes are taking 1 or 2 ms, that's fine. But when a DC goes out and you have to revert to a secondary one in China instead of 20 miles away, the incredible latency will lead to write timeouts and highly inconsistent data.

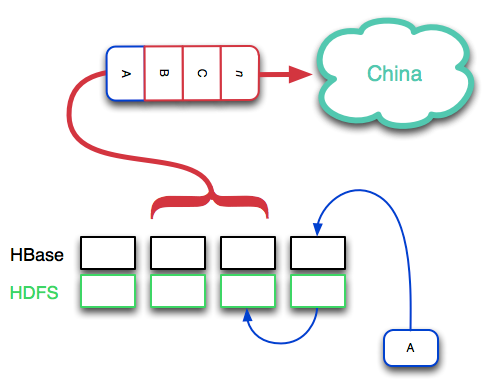

Let's take a look at the HBase replication model (note: this is coming in the .21 release):

What's going on here:

- The data is written to the HBase write-ahead-log in RAM, then it is flushed to disk

- The file on disk is automatically replicated due to the Hadoop Filesystem's nature

- The data enters a "Replication Log", where it is piped to another Data Center.

With HBase/Hadoop's deliberate sequence of events, consistency within a datacenter is high. There is usually only one version of a piece of data for a given time period. If there is more than one, HBase's timestamps allow your code to figure out which version is the "correct" one, instead of it being chosen by the cluster. Due to the nature of the Replication Log, one can always tell the state of the data consistency at any time, a valuable tool to have when another data center goes down. In addition, this structure makes it easy to recover from high-latency scenarios that can occur with inter-continental data transfer.
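A rough Python sketch of the log-shipping idea shows why the state is always knowable. It is not HBase's actual replication code. The `ReplicationLog` class and its `ship` and `lag` methods are hypothetical, and the remote datacenter is modeled as a plain dictionary.

```python
class ReplicationLog:
    """Ordered log of edits plus a pointer to what the remote DC has applied."""

    def __init__(self):
        self.entries = []  # ordered edits: (sequence, key, value)
        self.shipped = 0   # number of entries already applied remotely

    def append(self, key, value):
        self.entries.append((len(self.entries) + 1, key, value))

    def ship(self, remote, batch_size):
        """Apply the next batch to the remote store, in log order."""
        batch = self.entries[self.shipped:self.shipped + batch_size]
        for _, key, value in batch:
            remote[key] = value
        self.shipped += len(batch)
        return len(batch)

    def lag(self):
        """How many edits the remote DC is still missing."""
        return len(self.entries) - self.shipped

log = ReplicationLog()
remote_dc = {}
for i in range(5):
    log.append(f"row{i}", f"v{i}")

log.ship(remote_dc, batch_size=2)  # a slow link only gets two edits across
lag_after_first_batch = log.lag()
log.ship(remote_dc, batch_size=10)  # the link recovers and the rest follows
lag_after_catch_up = log.lag()
```

Because edits ship in order, a single number describes how far behind the remote datacenter is. A slow link only delays the catch-up and does not reorder or drop edits.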

Knowing Which To Choose

The business context of Amazon and Google explains the emphasis on different functionality between Cassandra and HBase.

Cassandra expects High Speed Network Links between data centers. This is an artifact of Amazon's Dynamo: Amazon datacenters were historically located very close to each other (dozens of miles apart) with very fast fiber optic cables between them. Google, however, had transcontinental datacenters which were connected only by the standard Internet, which means they needed a more reliable replication mechanism than the P2P eventual consistency.

If you need highly available writes with only eventual consistency, then Cassandra is a viable candidate for now. However, many apps are not happy with eventual consistency, and Cassandra is still lacking many features. Furthermore, even if writes do not fail, there is still cluster downtime associated with even minor schema changes. HBase is more focused on reads, but can handle very high read and write throughput. It's much more Data Warehouse ready, in addition to serving millions of requests per second. The HBase integration with MapReduce makes it valuable and versatile.
